By Perceptive Analytics

The more data you collect, the better your models tend to be, but what if the data you want resides on a website? This is a common problem in social media analysis, where the data comes from users posting content online and can be very unstructured. While some websites support data collection from their pages and have even exposed packages and APIs (such as Twitter), most web pages lack the capability and infrastructure for this. If you are a data scientist who wants to capture data from such pages, you won’t want to open them all manually and copy the content one page at a time. R has packages that remove this barrier. They are based on a technique known as ‘web scraping’, which converts data, whether structured or unstructured, from HTML into a form on which analysis can be performed. Let us look at web scraping using R.

Harvest Data with “rvest”

Before diving into web scraping with R, note that, in my opinion, this is an advanced topic to start with. A working knowledge of R is absolutely necessary. Hadley Wickham authored the rvest package for web scraping in R, which I will be demonstrating in this article. The package also requires the ‘selectr’ and ‘xml2’ packages to be installed. Let’s install the package and load it first.
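The original install-and-load snippet is not preserved here, so the following is a minimal sketch of that step. The CRAN mirror URL is an assumption; any mirror works. Installing rvest should pull in selectr and xml2 as dependencies.

```r
# Install rvest only if it is not already available.
# The mirror URL below is an assumed choice, not part of the original article.
if (!requireNamespace("rvest", quietly = TRUE)) {
  install.packages("rvest", repos = "https://cloud.r-project.org")
}

# Attach the package so read_html(), html_nodes() and html_text() are available
library(rvest)
```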

For a deeper look at the legal concerns around web scraping, see the 2018 publications Legality and Ethics of Web Scraping by Krotov and Silva and Twenty Years of Web Scraping and the Computer Fraud and Abuse Act by Sellars. To read a web page into R, we can use the rvest package. It is inspired by libraries like Beautiful Soup and makes it easy to scrape data from HTML web pages. The first important function is read_html(), which returns an XML document for the page.

The way rvest works is straightforward. Much as you and I would copy content from web pages by hand, rvest starts from the link to the web page. The page is then read, and the appropriate tags are identified. HTML organizes its content using tags, which CSS selectors can target. These selectors need to be identified so that rvest can extract their content. We can then convert all the scraped data into a data frame and perform our analysis. Let’s take an example of capturing the content from a blog page: the PGDBA wordpress blog for analytics. We will look at one of the pages from their experiences section. The link to the page is: http://pgdbablog.wordpress.com/2015/12/10/pre-semester-at-iim-calcutta/

As in the first step mentioned earlier, I store the web address in a variable, url, and pass it to the read_html() function. The page is read into memory much as a CSV file is read with the read.csv() function.
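A minimal sketch of this step follows. The variable name url comes from the text; the name webpage and the tryCatch() wrapper are my own additions, so that a missing network connection or a moved page yields NULL rather than stopping the script.

```r
library(rvest)

# The blog post used throughout this article
url <- "http://pgdbablog.wordpress.com/2015/12/10/pre-semester-at-iim-calcutta/"

# Read the page into memory; on any failure (no network, page moved)
# fall back to NULL instead of raising an error
webpage <- tryCatch(read_html(url), error = function(e) NULL)

# A successful read gives an object of class "xml_document"
class(webpage)
```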

Not All Content on a Web Page is Gold - Identifying What to Scrape

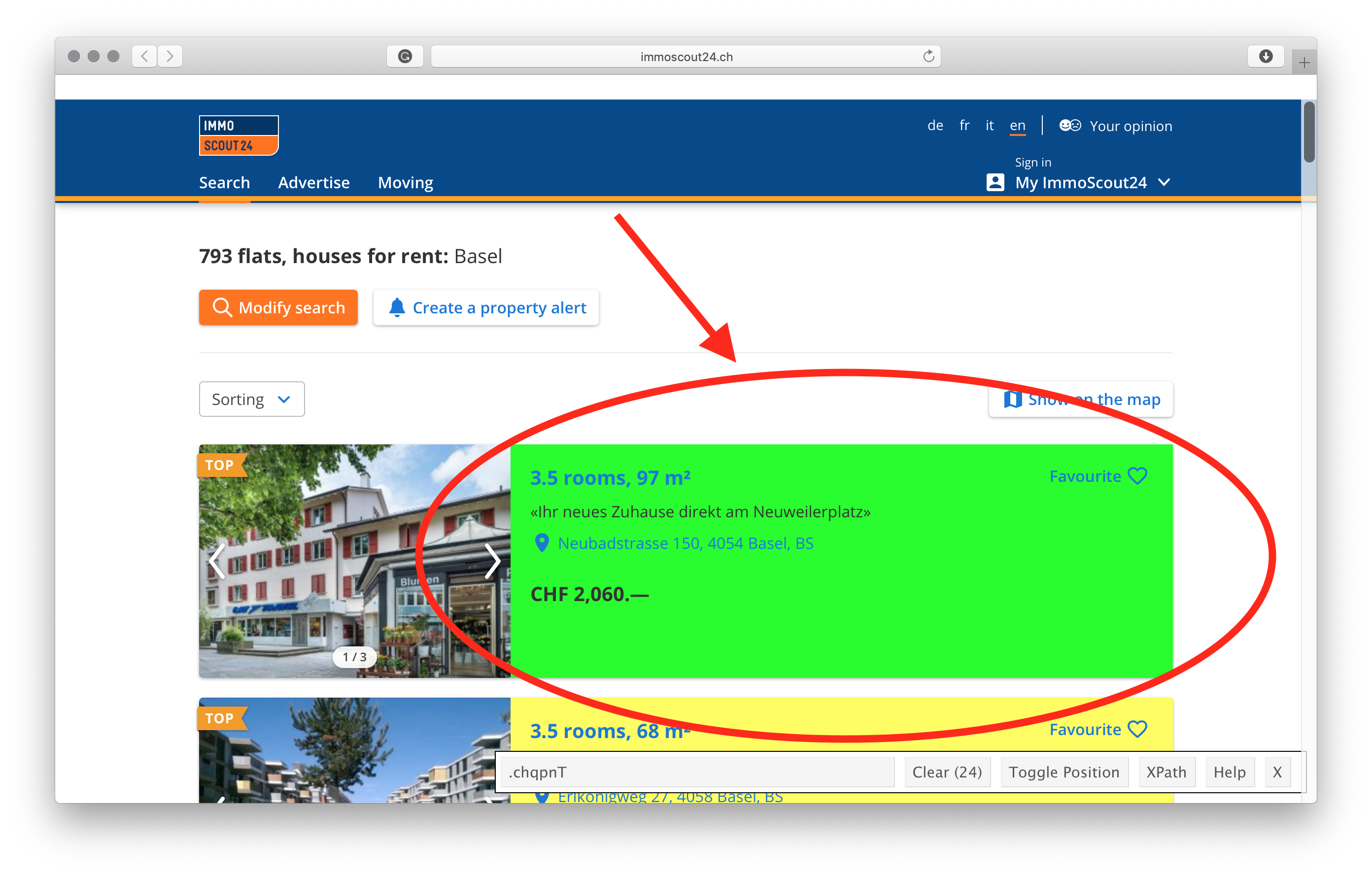

Web scraping starts after the url has been read. However, a web page can contain a lot of content, and we may not need everything. This is why web scraping is performed for targeted content. For this, we use the selector gadget. The selector gadget is now available as a Chrome extension and is used to pinpoint the names of the tags we want to capture. If you don’t have the selector gadget and have not used it, you can read about it using the command in R. You can also install the gadget by going to the website http://selectorgadget.com/
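The command itself is not preserved in this copy of the article. My assumption is that it refers to the SelectorGadget vignette that ships with rvest, which can be looked up as follows. If your installed version does not bundle that vignette, the call warns and returns nothing.

```r
# Assumption: the missing command is the vignette bundled with rvest.
# Assigning the result avoids opening a viewer in non-interactive sessions.
sg <- vignette("selectorgadget", package = "rvest")

# Open the vignette only when running interactively and it was found
if (interactive() && !is.null(sg)) {
  print(sg)
}
```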

After installing the selector gadget, open the web page and click on the content you want to capture. Based on the content selected, the selector gadget generates the tag that was used to store it in HTML. The content can then be scraped by passing the tag (also known as a CSS selector) to the html_nodes() function and converting the result to text with html_text(). The sample code in R looks like this:
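The original sample is missing here, so this is a small stand-in that shows the pattern. The inline HTML and the .headline selector are hypothetical, chosen so the snippet runs without a network connection. In rvest 1.0 and later, html_elements() is the preferred name for html_nodes(), but both work.

```r
library(rvest)

# Hypothetical page content; in practice this comes from read_html(url)
page <- read_html('<html><body><h1 class="headline">Hello</h1><p>Body text</p></body></html>')

# Select nodes by CSS selector, then extract their text
headline <- page %>%
  html_nodes(".headline") %>%
  html_text()

headline
```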

Simple, isn’t it? Let’s take a step further and capture the content of our target web page!

Scraping Your First Webpage

I chose a blog page because it is all text and serves as a good starting example. Let’s begin by capturing the date on which the article was posted. Clicking on the date with the selector gadget revealed that the tag required to get this data was .entry-date.
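A sketch of the date extraction, using the .entry-date selector named above. To keep it runnable offline, the page is a hypothetical stand-in; the date string is a placeholder inferred from the URL, not scraped output. On the real page, replace the literal HTML with read_html(url).

```r
library(rvest)

# Hypothetical stand-in for the blog page (placeholder date taken from the URL)
webpage <- read_html('<html><body><time class="entry-date">December 10, 2015</time></body></html>')

# Extract the text of every node matching the .entry-date selector
post_date <- webpage %>%
  html_nodes(".entry-date") %>%
  html_text()

post_date
```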

It’s an old post! The next step is to capture the headings. However, there are two headings here: one is the title of the article and the other is the summary. Interestingly, both of them can be identified using the same tag. The beauty of the rvest package is that it can capture both headings in one go. Let’s perform this step.
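The selector shared by the two headings is not preserved in this copy, so the sketch below uses an assumed .heading class on a hypothetical stand-in page. Only the vector name title_summary comes from the article. On the real page, use whatever selector the selector gadget reports.

```r
library(rvest)

# Hypothetical stand-in page: summary first, title second, both sharing one class
webpage <- read_html('
<html><body>
  <h2 class="heading">A short summary of the post</h2>
  <h1 class="heading">Pre-semester at IIM Calcutta</h1>
</body></html>')

# ".heading" is an assumed selector, not the one used on the real blog
title_summary <- webpage %>%
  html_nodes(".heading") %>%
  html_text()

# Matches come back in document order, so both headings land in one vector
title_summary
```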

The main title is stored as the second value in the title_summary vector. The first value contains the summary of the data. With this, the only section remaining is the main content. This is probably organized using the paragraph tag. We will use the ‘p’ tag to capture all of it.
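A sketch of the final step, capturing every paragraph with the ‘p’ tag and collecting the result in a data frame for analysis. The page content is a hypothetical stand-in, and the names content and post are my own.

```r
library(rvest)

# Hypothetical stand-in for the blog page body
webpage <- read_html('
<html><body>
  <p>First paragraph of the post.</p>
  <p>Second paragraph of the post.</p>
</body></html>')

# Capture the text of every paragraph element
content <- webpage %>%
  html_nodes("p") %>%
  html_text()

# One row per paragraph, ready for further analysis
post <- data.frame(
  paragraph = seq_along(content),
  text = content,
  stringsAsFactors = FALSE
)

post
```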

Web Scraping Can Be Ugly

Depending on which websites you want to scrape, the process can be involved and quite tedious. Many websites are well aware that people are scraping them, so they offer Application Programming Interfaces (APIs) that make requests for information easier for the user and make access easier for the server administrators to control. Most of the time, the user must apply for a “key” to gain access.

For premium sites, the key costs money. Some sites like Google and Wunderground (a popular weather site) allow some number of free accesses before they start charging you. Even so, the results are typically returned in XML or JSON, which you then have to parse to get the information you want. In the best situation, there is an R package that wraps the parsing and returns lists or data frames.

Here is a summary:

First, always try to find an R package that will access a site (e.g. New York Times, Wunderground, PubMed). These packages (e.g. omdbapi, easyPubMed, RBitCoin, rtimes) provide a programmatic search interface and return data frames with little to no effort on your part.

If no package exists, then hopefully there is an API that allows you to query the website and get results back in JSON or XML. I prefer JSON because it’s “easier” and the packages for parsing JSON return lists, which are native data structures in R, so you can easily turn results into data frames. You will usually use the rvest package in conjunction with the XML and RJSONIO packages.
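To illustrate how little work a JSON response needs, here is a minimal sketch. It uses the jsonlite package rather than RJSONIO, purely because jsonlite is installed alongside rvest. The JSON string is a made-up stand-in for an API response, not output from any real service.

```r
library(jsonlite)

# Hypothetical API response: an array of records
response <- '[
  {"station": "A1", "temp_c": 21.5},
  {"station": "B2", "temp_c": 18.0}
]'

# fromJSON() simplifies an array of flat records into a data frame
readings <- fromJSON(response)

readings
```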

If the website doesn’t have an API, then you will need to scrape text. This isn’t hard, but it is tedious. You will need to use rvest to parse HTML elements. If you want to parse multiple pages, then you will need to use rvest to move to the other pages and possibly fill out forms. If there is a lot of JavaScript, then you might need to use RSelenium to programmatically manage the web page.
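As a rough sketch of the multi-page case, the same extraction can be wrapped in a function and applied to each page in turn. The helper scrape_paragraphs() and the inline pages are hypothetical. With real sites, the vector would hold URLs, and navigating links or forms would need additional rvest functions not shown here.

```r
library(rvest)

# Hypothetical helper: read one page (URL, file path, or literal HTML)
# and return its paragraphs as a data frame tagged with a page number
scrape_paragraphs <- function(source, page_id) {
  page <- read_html(source)
  text <- page %>% html_nodes("p") %>% html_text()
  data.frame(page = rep(page_id, length(text)), text = text,
             stringsAsFactors = FALSE)
}

# Stand-ins for two pages; replace with a vector of URLs in practice
pages <- c(
  "<html><body><p>Page one, first paragraph.</p><p>Page one, second paragraph.</p></body></html>",
  "<html><body><p>Page two, only paragraph.</p></body></html>"
)

# Scrape each page and stack the results into one data frame
results <- do.call(
  rbind,
  lapply(seq_along(pages), function(i) scrape_paragraphs(pages[[i]], i))
)

results
```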